Migrate With Confidence From Microsoft Windows Servers to UNIX/Linux

Strategic Information for IT Executives and Managers

A white paper by Jon C. LeBlanc

IT Manager,

(Hewlett Packard Certified IT Professional,

Sun Microsystems Certified Solaris System Administrator)

Copyright © 2002 by Jon C. LeBlanc.

This material may be distributed only subject to the terms and conditions set forth in the Open Publication License, v1.0 or later (the latest version is presently available at http://www.opencontent.org/openpub/).

Distribution of substantively modified versions of this document is prohibited without the explicit permission of the copyright holder.

Originally published: March 29, 1999

Latest update: December 8, 2002

Web Location of this document:

http://web.cuug.ab.ca/~leblancj/nt_to_unix.html

Spanish translation of this document, courtesy of TLDP-ES:

(http://es.tldp.org/Manuales-LuCAS/conf-MigraNT2GNU/doc-migrar-nt-linux-html/)

Danish translation of an earlier version of this document, courtesy of Anne Oergaard:

(http://www.sslug.dk/~anne/) and Kim Futtrup Petersen.

Executive Summary

In the corporate community, making the wrong choices can have devastating fiscal and productivity results at the extreme, and unsatisfactory results at the least. Compelling reasons (efficiency, security, performance, and software and licensing costs) exist for migrating systems away from the Microsoft Windows NT, 2000, and XP operating systems and for avoiding the purchase of Microsoft Windows .NET Server. Is this white paper applicable to your environment? If your major software applications run only on Windows operating systems and your organization is not well disposed to moving away from them, it likely is not. Nonetheless, this white paper will serve to illustrate future paths and alternatives. A migration to Java-based services is also recommended, but is not discussed in this white paper.

I have implemented Microsoft Windows NT and 2000 in global-scale computing environments, and I am in direct contact with corporate testers of Microsoft Windows XP and of pre-release versions of .NET Server. Concurrently, I have implemented and administered global-scale UNIX versions from Hewlett-Packard, Sun Microsystems, IBM, Compaq (pre-HP), and other UNIX vendors, as well as Linux distributions from Red Hat, Caldera, Mandrake, Corel, TurboLinux, and SUSE. I am currently evaluating Apple's OS X. As an experienced educator, IT manager, and multi-platform system administrator, I hope my credentials will reassure the reader (http://web.cuug.ab.ca/~leblancj).

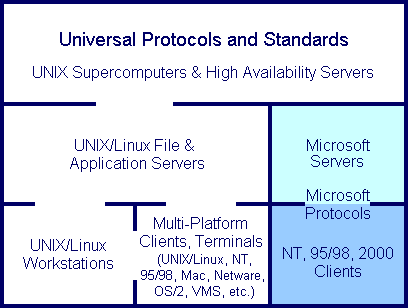

The UNIX/Linux Alternative to Microsoft Windows Servers

As an offshoot of UNIX with over ten years of development, Linux employs (and often enhances) UNIX's essential design features, concepts, standards, and performance. In this sense Linux is sometimes considered a UNIX clone, yet it is probably more accurately described as "UNIX-like". Unless one is discussing specific differences between UNIX and Linux, it is usually safe to refer to them generically in similar terms. Leading Linux distributions (versions, or flavours) are Red Hat, Debian, Mandrake, Caldera, SUSE, TurboLinux, and Conectiva. All routinely inter-operate with one another. In fact, the last four vendors have committed to offering a standardized version among them, called United Linux. Global-scale hardware vendor support of Linux is provided by IBM, HP, SGI, Dell, and (most recently) Sun, among others.

UNIX was born and raised in the milieu of high-performance, highly connected computing. UNIX, the C computer language, and TCP/IP networking were co-developed in the 1970s and are intrinsically inseparable within the OS. Originally called "UNICS" when first developed by Ken Thompson, Dennis Ritchie, et al. of Bell Labs, UNIX was designed from the ground up to be a multi-tasking, multi-user computing environment. Linux picks up directly on that basis. Both continue to have their already viable capabilities methodically expanded.

Microsoft Windows NT, Windows 2000, and Windows XP invite comparison with UNIX/Linux, but only at the lower-end workstation/small server level. In the early 1990s, Windows NT was rolled out by Microsoft as a low-cost, DOS-and-VMS-based alternative to UNIX and other operating systems, and Microsoft certainly did nothing to stop a growing perception of it as a potential "UNIX Killer". Engineers and developers recruited from Digital Equipment Corporation (DEC) brought excellent VMS-based expertise into the Microsoft fold.
Dave Cutler, considered the "father" of NT and perhaps the key developer of DEC's VMS OS, had been working at DEC on a new OS, code-named "Mica", meant to be a successor to VMS. DEC's top management were troubled that Cutler was approaching the design from a hardware-platform-neutral stance. Central to the Mica design was the concept of the "Hardware Abstraction Layer" (HAL), which offered a uniform software platform regardless of the underlying computer hardware. In retrospect, and somewhat ironically, HAL foreshadowed Sun's Java platform, whose main goal is to function identically across disparate hardware architectures and against which Microsoft has waged an ongoing crusade. Doubly ironically, in a feat of twisted logic, Microsoft has subsequently cloned Java into its own "C#" platform (a crippled Java clone running only on Microsoft Windows). DEC executives, worried that their proprietary hardware-derived revenue would suffer if Mica were adapted by non-DEC hardware vendors, terminated the Mica project. Cutler, now adrift, was quickly recruited by Microsoft and began work on the Windows NT project in 1988. Amid suspicions of intellectual property theft, DEC eventually sued Microsoft, alleging that Cutler and his Mica team had simply continued the same project within Microsoft, culminating in the birth of the Windows NT OS. After Microsoft settled the case with DEC for $150 million, inside sources alleged that large quantities of NT's code (and even most of the programmers' comments) were identical to Mica's. Implicit in this tale is that, whatever the circumstances under which Microsoft acquired this new project, it had the promise of a world-class OS in its hands. Had Microsoft's small team of NT developers outranked its marketers, corporate computing would likely be quite different today.
The root of the problems with Windows NT and its successor OSes in the corporate computing world is that NT grew out of a small team project (run by outsiders) within a company whose only computing experience was a very limited, local, small-scale desktop environment, in which any connected computers were assumed to be completely trusted and the OS was capable of neither real-time multi-tasking nor multi-user computing. While the NT team was ready to use the new OS to help Microsoft address those huge shortcomings in its product line, management insisted that the marketers' favourite features, no matter how technically unworthy, be grafted onto the NT OS. Under such pressure, the technical poisoning of the NT platform began. From the beginnings of NT, Microsoft's relentless, cyclical model of OS version replacement meant that even the best efforts to create a commercial-grade multi-user, multi-tasking OS were hindered by the sequential, marketing-driven loading of feature sets, at the expense of intrinsically technical improvements in security, scalability, and stability. Microsoft Windows 2000 and XP are built directly on Windows NT technology, as their boot-up splash screens declare, and the fundamental development urges within Microsoft have not changed. Rewrites and redesigns of many of the most basic features of Microsoft OSes are mandated in the name of product differentiation, often stranding users of earlier versions with little or no continuing support before the manufacturer could bring those versions to a state of robustness, stability, and trustworthiness. The Microsoft marketing-driven product cycle took a new spin with the late-2001 rollout of Windows XP, so soon after the release of Windows 2000. To put it plainly, Microsoft's product differentiation is not often correlated with product improvement.
Commercial "rush to market" concerns have raised serious doubts about the quality of many Microsoft products, as discussed later with regard to security issues. Microsoft Windows NT was full of promise at its release, as were Windows 2000 and Windows XP. Many of the most celebrated NT marketing promises remained unfulfilled even after its sixth service pack was released in 1999, while Windows 2000 brought improvements along with similar, additional, and alternative difficulties. XP's feature set, weak security stance, and generally poor multi-tasking and multi-user support strain the use of the word "improvement" in comparison with Microsoft's previous OS offerings.

Network & Overall Environment Compatibility

Regarding medium and high-end servers, major corporate users have traditionally relied on UNIX to support commercial-grade applications from vendors such as Oracle, Sybase, SAP, Lotus Notes, and others. Recently they have become increasingly comfortable with the Linux operating system on web servers (typically running the popular Open Source web server application called Apache), on lower-end servers for small businesses and local environments, and in the data center. In fact, the IT industry has displayed a significant willingness to migrate from low-end UNIX machines to Linux, owing to its ease of substitution and to the significantly lower cost of predominantly x86-based equipment compared with UNIX vendors' proprietary hardware. This transition is aided inestimably by the fact that both UNIX and Linux allow administrators to completely integrate capabilities and methodologies (based on universal, "open" technical standards and protocols) between and amongst these machines. Linux migration continues to fare quite well in the class of small servers and workstations often inhabited by Microsoft Windows NT, 2000, and XP. The Microsoft products, by contrast, are largely based on proprietary network protocols, file and data formats, and functionality.
These typically thwart such vertical integration and push IT leaders toward "lock-in" to the closed, Microsoft-centric subset. Modifying a Windows server or workstation to comply with universal protocols can be difficult and costly. Experience of recent years shows that as personnel who have made their careers mostly or only in the Microsoft OS world have been promoted within corporate IT management structures, they have tended to see enterprise computing as an extension of Microsoft-based desktop computing, and thus have tended to address the requirements of medium and high-end server environments from an insufficiently broad skill set and vantage point. Microsoft's corporate strategy of trying to make administration of its OSes "easy" has undoubtedly opened the computing world to untold millions of people, but it has unfortunately also fostered the pretense that administering critical corporate computing environments is an "easy" pursuit. The resulting Microsoft-centric computing ecosystem elevates Microsoft OSes to an artificially and unjustifiably high status in corporate environments, given the capabilities (or lack thereof) of the Microsoft server products. As is human nature, such mindsets and cultures are difficult to sway, even in the face of unflattering comparison (of which an abundance now exists). The net result for a network or enterprise computing situation is an artificial stratification of OSes based on their capability (or lack thereof) to inter-operate as one environment. The Windows OSes have bred a separate, less flexible class of administrators, who employ their own proprietary administrative means and require specific training apart from that of the UNIX/Linux camp. In almost every case, the number of administrators required to oversee a Microsoft-centric computing environment is much higher than for an equivalent UNIX/Linux environment, for many reasons outlined in this paper.